See my article on the company blog for a discussion on this, and a how-to on using Fail2ban to help stop these attacks.

Category: Linux

SIP Brute Force Attacks on the Increase

On our own Asterisk PBX server for our office and on some customer boxes with open SIP ports, we have seen a dramatic rise in brute force SIP attacks.

They all follow a very common pattern – just over 41,000 login attempts on common extensions such as 200, 201, 202, etc. We were even asked to provide some consultancy about two weeks ago for a company using an Asterisk PBX who saw strange (irregular) calls to African countries.

They were one example of such a brute force attack succeeding because of a common mistake:

1. an open SIP port with:

2. common extensions with:

3. a bad password.

(1) is often unavoidable. (2) can be mitigated by not using the predictable three or four digit extension as the username. (3) is inexcusable. We’ve even seen entries such as extension 201, username 201, password 201. The password should always be a random string of mixed alphanumeric characters. A good recipe for generating these passwords is to use openssl as follows:

openssl rand -base64 12

Users should never be allowed to choose their own passwords, and dictionary words should never be used. The brute force attack tried more than 41,000 common passwords.
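If there are several extensions to provision, the same openssl command can be wrapped in a small loop. This is only a sketch: the function names and the extension numbers are made up for illustration, and it assumes openssl is on the PATH.

```shell
#!/bin/sh
# Sketch: generate one random secret per extension.
# gen_sip_secret and list_secrets are hypothetical helper names,
# not part of Asterisk or openssl.

gen_sip_secret() {
    # 12 random bytes, base64 encoded, gives a 16 character string
    openssl rand -base64 12
}

# Print "extension secret" pairs for the extensions given as arguments.
list_secrets() {
    for ext in "$@"; do
        printf '%s %s\n' "$ext" "$(gen_sip_secret)"
    done
}
```

Calling `list_secrets 200 201 202` prints one line per extension, which can then be copied into the relevant SIP peer definitions by hand.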

Preventing or Mitigating These Attacks

You can mitigate against these attacks by putting external SIP users into dedicated contexts which limit the kinds of calls they can make (internal only, local and national, etc); ask for a PIN for international calls; limit time and cost; etc.

However, the above might be a lot of work when simply blocking users after a number of failed attempts can be much easier and more effective. Fail2ban is a tool which can scan log files like /var/log/asterisk/full and firewall IP addresses that make too many failed authentication attempts.
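To get a feel for what Fail2ban would be acting on, the failed registrations can be counted per source address by hand. The sketch below is not how Fail2ban itself works internally; it assumes the log lines have the usual chan_sip form `Registration from '...' failed for '203.0.113.5' - Wrong password`, and the function name is my own.

```shell
#!/bin/sh
# Sketch: count failed SIP registrations per source IP.
# Assumption: lines look like
#   ... chan_sip.c: Registration from '...' failed for '203.0.113.5' - Wrong password
# count_failed_sip is a hypothetical helper name.

count_failed_sip() {
    # Pull out the address after "failed for '", then count and rank.
    sed -n "s/.*Registration from .* failed for '\([0-9.]*\).*/\1/p" \
        | sort | uniq -c | sort -rn
}

# Usage: count_failed_sip < /var/log/asterisk/full
```

The busiest offenders come out at the top of the list, which is a quick way to check whether a ban threshold is sensible before turning it on.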

See VoIP-Info.org for generic instructions or below for a quick recipe to get it running on Debian Lenny.

Quick Install for Fail2ban with Asterisk SIP on Debian Lenny

- Install Fail2ban:

apt-get install fail2ban

- Create a file called /etc/fail2ban/filter.d/asterisk.conf with the following (thanks to this page):

- Put the following in /etc/fail2ban/jail.local:

- Edit /etc/asterisk/logger.conf such that the date format under [general] reads:

dateformat=%F %T

- Also in /etc/asterisk/logger.conf, ensure full logging is enabled with a line such as the following under [logfiles]:

full => notice,warning,error,debug,verbose

- Restart / reload Asterisk.

- Start Fail2ban:

/etc/init.d/fail2ban start

and check:

- Fail2ban’s log at /var/log/fail2ban.log for:

INFO Jail 'asterisk-iptables' started

- The output of iptables --list -v for something like:

Chain fail2ban-ASTERISK (1 references)
pkts bytes target prot opt in out source destination
227 28683 RETURN all -- any any anywhere anywhere

You should now receive emails (assuming you replaced the example addresses above with your own and your MTA is correctly configured) when Fail2ban starts or stops and when a host is blocked.
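If no bans ever show up, the two logger.conf settings from the steps above are the first thing worth checking, since a different date format means the filter will not match anything. A rough sketch of such a check follows; the function name is hypothetical and the default path is the one used in this post.

```shell
#!/bin/sh
# Sketch: confirm logger.conf has the date format and "full" log
# that the Fail2ban recipe relies on.
# check_logger_conf is a hypothetical helper name.

check_logger_conf() {
    conf="${1:-/etc/asterisk/logger.conf}"

    grep -Eq '^[[:space:]]*dateformat[[:space:]]*=[[:space:]]*%F %T' "$conf" || {
        echo "dateformat is not set to %F %T in $conf" >&2
        return 1
    }

    grep -Eq '^[[:space:]]*full[[:space:]]*=>' "$conf" || {
        echo "no 'full' log file configured in $conf" >&2
        return 1
    }

    echo "logger.conf looks OK"
}
```

It only looks for the lines themselves and does not verify that they sit under the right section headings.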

Link: MySQL Best Practices

I came across this site today which has some good advice for MySQL. I’m particularly happy to see that Doctrine, a relatively new ORM for PHP which we’re big fans of, is gaining some traction.

I also noticed that Piwik, an open source analytics package, is using some interesting quality assurance tools which may be of interest to PHP developers (along with a continuous integration tool I came across recently: phpUnderControl).

Encoding Video for the HTC Desire

A useful script to encode all files passed as parameter(s) for viewing on a HTC Desire.

While I’m writing about video encoding, another task I did recently was encode a load of video files for my HTC Desire (a handset I’d strongly recommend for anyone). The main reason being that I like to watch something while pounding the treadmill in the gym.

A useful script to encode all files passed as parameter(s) (must all end in .avi) is:

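#!/bin/bash

# Two-pass encode of every .avi file given on the command line.
# foo.avi becomes _foo.mp4 in the same directory.
for src in "$@"; do
    dst="_${src%%avi}mp4"

    echo -en "Encoding $src\t\t\tPASS1"

    # Pass 1: video analysis only (no audio)
    ffmpeg -b 600kb -i "$src" -v 0 -pass 1 -passlogfile FF -vb 600Kb \
        -r 25 -an -threads 2 -y "$dst" > /dev/null

    echo -e "\tPASS2"

    # Pass 2: final encode, with the audio volume boosted
    ffmpeg -b 600kb -i "$src" -v 0 -pass 2 -passlogfile FF -vb 600Kb \
        -r 25 -threads 2 -y -vol 1536 "$dst" > /dev/null

    rm FF-0.log
done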

Encoding Full HD as FLV (for Gallery3)

I have a full HD camcorder and I wanted to stick some good quality video on my gallery for relatives to view. So, I needed to convert my sample 100MB MP4 full HD file to a suitably sized FLV for the Gallery. Here’s what I did…

I have a very nice Samsung R10 Full HD Camcorder which I bought last year. After a recent family holiday, I wanted to stick some good quality video on my gallery for relatives to view. The gallery is RC2 of the excellent Gallery 3 package which uses another excellent open source tool called Flow Player to play movies.

So, I needed to convert my test 100MB MP4 full HD file to a suitably sized FLV for the Gallery. My initial attempts with ffmpeg worked fine but the quality (sample) was very poor and changing the bit rate in different ways seemed to make no difference:

ffmpeg -i HDV_0056.MP4 -vb 600k -s vga -ar 22050 -y Test.flv
ffmpeg -i HDV_0056.MP4 -b 600k -s vga -ar 22050 -y Test.flv
ffmpeg -i HDV_0056.MP4 -vb 600k -s vga -ar 22050 -y Test.flv

I then turned to x264 and broke the process down to a number of stages:

- Extract the raw video to YUV4MPEG (this creates a 7GB file from my 100MB MP4):

ffmpeg -i HDV_0056.MP4 HDV_0056.y4m

- Encode the video component to H.264/FLV at the specified bit rate with good quality:

x264 --pass 1 --preset veryslow --threads 0 --bitrate 4000 \
    -o HDV_0056.flv HDV_0056.y4m
x264 --pass 2 --preset veryslow --threads 0 --bitrate 4000 \
    -o HDV_0056.flv HDV_0056.y4m

Note that I’m using the veryslow preset which is… very slow! You can use other presets as explained in the x264 man page.

- Extract and convert the audio component to MP3 (the sample rate is important):

ffmpeg -i HDV_0056.MP4 -vn -ar 22050 HDV_0056.mp3

- Merge the converted audio and video back together:

ffmpeg -i HDV_0056.flv -i HDV_0056.mp3 -acodec copy \
    -vcodec copy -y FullSizeVideo.flv

This yields a near perfect encoding at 22MB. It’s still full size though (HD at 1920×1080).

- The last step is to then use ffmpeg to resize the video and it now seems to respect bit rate parameters:

ffmpeg -i FullSizeVideo.flv -s vga -b 2000k \
    -vb 2000k SmallSizeVideo.flv
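The steps above can be collected into one function. This is a sketch rather than the script I actually used: the `run` helper, the `DRY_RUN` variable and the `_full` / `_small` output names are my own inventions, while the ffmpeg and x264 options are copied unchanged from the commands above.

```shell
#!/bin/sh
# Sketch: the extract / encode / merge / resize steps as one function.
# Set DRY_RUN=1 to print the commands instead of running them.

run() {
    if [ "${DRY_RUN:-0}" = "1" ]; then
        printf '%s\n' "$*"
    else
        "$@"
    fi
}

hd_to_flv() {
    src="$1"
    base="${src%.*}"    # HDV_0056.MP4 -> HDV_0056

    run ffmpeg -i "$src" "$base.y4m" &&
    run x264 --pass 1 --preset veryslow --threads 0 --bitrate 4000 \
        -o "$base.flv" "$base.y4m" &&
    run x264 --pass 2 --preset veryslow --threads 0 --bitrate 4000 \
        -o "$base.flv" "$base.y4m" &&
    run ffmpeg -i "$src" -vn -ar 22050 "$base.mp3" &&
    run ffmpeg -i "$base.flv" -i "$base.mp3" -acodec copy \
        -vcodec copy -y "${base}_full.flv" &&
    run ffmpeg -i "${base}_full.flv" -s vga -b 2000k \
        -vb 2000k "${base}_small.flv"
}
```

Running `DRY_RUN=1 hd_to_flv HDV_0056.MP4` first is a cheap way to check the file names before committing to a 7GB intermediate file and a very slow encode.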

The resultant video can be seen here.

Robert Swain has a useful guide for ffmpeg x264 encoding.

perl: warning: Falling back to the standard locale (“C”)

Every time I debootstrap a new Debian server for a XenU domain, I get lots of verbose output from Perl scripts:

perl: warning: Setting locale failed.

perl: warning: Please check that your locale settings:

LANGUAGE = (unset),

LC_ALL = (unset),

LANG = "en_IE.UTF-8"

are supported and installed on your system.

perl: warning: Falling back to the standard locale ("C").

The fix is simple:

# apt-get install locales
# dpkg-reconfigure locales

and select your locales.
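To confirm the fix took, the locale named in LANG can be compared against what the system reports as installed. A sketch, assuming glibc's habit of listing `en_IE.UTF-8` as `en_IE.utf8` in the output of `locale -a`; both function names are hypothetical.

```shell
#!/bin/sh
# Sketch: check whether a locale such as en_IE.UTF-8 is installed.
# Assumption: "locale -a" lists codesets lower-cased without hyphens
# (en_IE.utf8), as glibc based systems do.

normalize_locale() {
    case "$1" in
        *.*)
            codeset=$(printf '%s' "${1#*.}" | tr 'A-Z' 'a-z' | sed 's/-//g')
            printf '%s.%s\n' "${1%%.*}" "$codeset"
            ;;
        *)
            printf '%s\n' "$1"
            ;;
    esac
}

locale_installed() {
    locale -a 2>/dev/null | grep -qxF "$(normalize_locale "$1")"
}

# Usage: locale_installed "$LANG" || echo "$LANG is not installed"
```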

Kubuntu 8.10 and Mobile Broadband (and KDE 4.1)

Kubuntu 8.10 and mobile broadband – the KNetworkManager has come a long way!

I updated my laptop from Kubuntu 8.04 to 8.10 (just released) yesterday. I do 90% of my work on my desktop which needs to just work and, as such, it’s running Kubuntu 7.10. My laptop, however, I play around with.

Most people’s first impression of 8.10 will be based on the upgrade process and post install issues. To date, I’ve always had to fix a lot of problems with the system after an upgrade to make it work. Not this time – it was absolutely seamless.

I was also apprehensive about KDE 4.1 and, to be honest, I was really worried that in a crunch I’d have to fall back to Gnome before degrading back to 8.04. I just don’t have the time these days to follow KDE development as much as I used to and I briefly installed KDE 4 a few months ago and thought it was far from finished.

I’m delighted to report KDE 4.1 is very slick and very polished. I’ve only had it for just over 24 hours but I have no complaints yet.

However, my main motivation for the upgrade was mobile broadband. Like most people, I use my laptop when on the move and my desktop when in the office. My laptop has an Ethernet port and a wi-fi card which both worked great with KNetworkManager but not mobile broadband. I got O2’s broadband dongle (the small USB stick) about four months ago and rely on it heavily.

I’ve been using Vodafone’s Mobile Connect Client to great effect but there were some issues:

- setting up the connection was a manual process (change X window access control; su to root; export the DISPLAY setting; and start the application);

- if I suspended the laptop then I needed to reboot the system to use the dongle again.

While both of the above could be solved, it’s just not plug and play. 8.10 is. With the dongle plugged into the USB port, KNetworkManager discovered the tty port. Configuring it was as easy as right clicking on the KNetworkManager icon and selecting the New Connection… entry for the tty port.

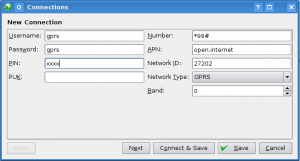

The next step requires knowledge of the O2 / provider settings but this is readily available online. For O2:

After the above, I just accepted the defaults for the rest of the options. And – to my delight – it just worked. And it worked after suspending the laptop. And after popping the USB dongle in and out for the heck of it. By clicking the Auto Connect option as part of the process, it also just works when I pop the dongle in.

chan_ss7, pcap files and 64bit machines

UPDATE: April 29th 2008

Anders Baekgaard of Dicea ApS (current chan_ss7 maintainers) recommends the following alternative patch. Please note that mtp3d.c will also need to be patched in the same way:

--- chan_ss7.c~ 2008-04-03 09:23:56.000000000 +0200

+++ chan_ss7.c 2008-04-29 08:29:20.000000000 +0200

@@ -249,11 +249,12 @@

static void dump_pcap(FILE *f, struct mtp_event *event)

{

+ unsigned int sec = event->dump.stamp.tv_sec;

unsigned int usec = event->dump.stamp.tv_usec -

(event->dump.stamp.tv_usec % 1000) +

event->dump.slinkno*2 + /* encode link number in usecs */

event->dump.out /* encode direction in/out */;

- fwrite(&event->dump.stamp.tv_sec, sizeof(event->dump.stamp.tv_sec), 1, f);

+ fwrite(&sec, sizeof(sec), 1, f);

fwrite(&usec, sizeof(usec), 1, f);

fwrite(&event->len, sizeof(event->len), 1, f); /* number of bytes of packet in file */

fwrite(&event->len, sizeof(event->len), 1, f); /* actual length of packet */

END UPDATE: April 29th 2008

A quickie for the Google trolls:

While trying to debug some SS7 Nature of Address (NAI) indication issues, I needed to use chan_ss7’s ‘dump’ feature from the Asterisk CLI. It worked fine but the resultant pcap files always failed with messages like:

# tshark -r /tmp/now
tshark: "/tmp/now" appears to be damaged or corrupt.
(pcap: File has 409000-byte packet, bigger than maximum of 65535)

After much digging about and head-against-wall banging, I discovered the issue is with the packet header in the pcap file. It’s defined by its spec to be:

typedef struct pcaprec_hdr_s {

guint32 ts_sec; /* timestamp seconds */

guint32 ts_usec; /* timestamp microseconds */

guint32 incl_len; /* number of octets of packet saved in file */

guint32 orig_len; /* actual length of packet */

} pcaprec_hdr_t;

chan_ss7 uses the timeval struct defined by system headers to represent ts_sec and ts_usec. But, on 64bit machines (certainly mine), its fields are 64-bit longs (8 bytes each) rather than the 32-bit values the pcap format expects (presumably as a step to get over the ‘year 2038 bug’). Hence the packet header is all wrong.
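One way to see the effect is to build a record header by hand with an 8-byte seconds field and read it back the way a pcap reader would, as four 32-bit values. The sketch below makes several assumptions: the byte values are fabricated, the host is little-endian, and the length fields are 4 bytes each. The function names are my own.

```shell
#!/bin/sh
# Sketch: a 20-byte header (8-byte tv_sec + 4-byte usec + two 4-byte
# lengths) read back as the 16-byte header a pcap reader expects.
# Assumptions: little-endian host, fabricated sample values.

misread_header() {
    # tv_sec (8 bytes), usec = 409000, len = 32, len = 32
    printf '\014\212\364\107\000\000\000\000\250\075\006\000\040\000\000\000\040\000\000\000' \
        | od -An -t u4 -N 16
}

show_misread() {
    # Split the four 32-bit values od prints into named fields.
    set -- $(misread_header)
    echo "ts_sec=$1 ts_usec=$2 incl_len=$3 orig_len=$4"
}
```

The upper half of the seconds field lands in ts_usec and the microseconds land in incl_len, so a microsecond value of 409000 is reported as a 409000-byte packet. That is consistent with the tshark error above.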

An easy solution is the following patch in mtp.h:

77a78,90

> /*

> * The packet header in the pcap file (used for the CLI command 'dump') is defined so has to

> * have the two time components as unsigned ints. However, on 64bit machines, the system

> * timeval struct may use unsigned long. As such, we use a custom version here:

> */

> struct _32bit_timeval

> {

> unsigned int tv_sec; /* Seconds. */

> unsigned int tv_usec; /* Microseconds. */

> };

>

>

>

125c138

< struct timeval stamp; /* Timestamp */

---

> struct _32bit_timeval stamp; /* Timestamp */

There may be a better way – but this works.

This relates to chan_ss7-1.0.0 from http://www.dicea.dk/company/downloads and I have let them know also. It’s also a known issue for the Wireshark developers (although I did not investigate in detail to see what their resolution was for the future). See the following thread from 1999:

Linux on a Dell Vostro 200

Following a recent post to ILUG asking about setting Linux up on a Dell Vostro 200, I followed up with my notes from the time I had to do it a few months back.

This is just a copy of my notes rather than a how-to but any competent Linux user should have no problem. Apologies in advance for the brevity; with luck you’ll be using a later version of Linux which will already have solved the network issue…

The two main issues and fixes were:

- The SATA CD-ROM was not recognised initially and the fix was to set the following parameter in the BIOS:

BIOS -> Integrated Peripherals -> SATA Mode -> RAID

- The network card is not recognised during a Linux install. Allow the install to complete without network and then download Intel’s e1000 driver from http://downloadcenter.intel.com/ or specifically by clicking here. The one I used then was e1000-7.6.9.tar.gz but the current version appears to be e1000-7.6.15.tar.gz (where the above link heads to – check for later versions).

My only notes of the install just say “essentially follow instructions in README” so I assume they were good enough! Obviously you’ll need Linux kernel headers at least as well as gcc and related tools.

Once built and installed, a:

modprobe e1000

should have you working. Use dmesg to confirm.

Recovering an LVM Physical Volume

Yesterday disaster struck – during a CentOS/RedHat installation, the installer said (not verbatim): “Cannot read partition information for /dev/sda. The drive must be initialized before continuing.”

Now on this particular server, sda and sdb were/are a RAID1 (containing the OS) and a RAID5 partition respectively and sdc was/is a 4TB RAID5 partition from an externally attached disk chassis. This was a server re-installation and all data from sda and sdb had multiple snapshots off site. sdc had no backups of its 4TBs of data.

The installer discovered the drives in a different order and sda became the externally attached drive. I, believing it to be the internal RAID1 array, allowed the installer to initialise it. Oh shit…

Now this wouldn’t be the end of the world. It wasn’t backed up because a copy of the data exists on removable drives in the UK. It would mean someone flying in with the drives, handing them off to me at the airport, bringing them to the data center and copying all the data back. Then returning the drives to the UK again. A major inconvenience. And it’s also an embarrassment as I should have ensured that sda is what I thought it was via the installer’s other screens.

Anyway – from what I could make out, the installer initialised the drive with a single partition spanning the entire drive.

Once I got the operating system reinstalled, I needed to try and recover the LVM partitions. There’s not a whole lot of obvious information on the Internet for this and hence why I’m writing this post.

The first thing I needed to do was recreate the physical volume. Now, as I said above, I had backups of the original operating system. LVM creates a file containing the metadata of each volume group in /etc/lvm/backup in a file named the same as the volume group name. In this file, there is a section listing the physical volumes and their ids that make up the volume group. For example (the id is fabricated):

physical_volumes {

pv0 {

id = "fvrw-GHKde-hgbf43-JKBdew-rvKLJc-cewbn"

device = "/dev/sdc" # Hint only

status = ["ALLOCATABLE"]

pe_start = 384

pe_count = 1072319 # 4.09057 Terabytes

}

}
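Rather than reading the id out of the backup file by eye, it can be pulled out with a few lines of awk. A sketch, assuming the backup file is laid out as in the excerpt above (`pvN {` on its own line, followed by `id = "..."` and `device = "..."`); the function name is hypothetical.

```shell
#!/bin/sh
# Sketch: list "pvN id device" for each physical volume in an LVM
# metadata backup such as /etc/lvm/backup/<vgname>.
# pv_ids is a hypothetical helper name.

pv_ids() {
    awk '
        # Start of a physical volume block, e.g. "pv0 {"
        $1 ~ /^pv[0-9]+$/ && $2 == "{" { pv = $1; next }

        # Only look at id/device lines inside a pv block, so the
        # volume group and logical volume ids are ignored.
        pv != "" && $1 == "id"     { id = $3; gsub(/"/, "", id) }
        pv != "" && $1 == "device" {
            dev = $3; gsub(/"/, "", dev)
            print pv, id, dev
            pv = ""
        }
    ' "$1"
}

# Usage: pv_ids /etc/lvm/backup/md1000
```

As the backup file itself says, the device name is only a hint; the id is the part that matters when recreating the physical volume.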

Note that after I realised my mistake, I installed the OS on the correct partition and after booting, the external drive became /dev/sdc* again. Now, to recreate the physical volume with the same id, I tried:

# pvcreate -u fvrw-GHKde-hgbf43-JKBdew-rvKLJc-cewbn /dev/sdc
Device /dev/sdc not found (or ignored by filtering).

Eh? By turning on verbosity, you find the reason among a few hundred lines of debugging:

# pvcreate -vvvv -u fvrw-GHKde-hgbf43-JKBdew-rvKLJc-cewbn /dev/sdc
...
#filters/filter.c:121 /dev/sdc: Skipping: Partition table signature found
#device/dev-io.c:486 Closed /dev/sdc
#pvcreate.c:84 Device /dev/sdc not found (or ignored by filtering).

So pvcreate will not create a physical volume using the entire disk unless I remove partition(s) first. I do this with fdisk and try again:

# pvcreate -u fvrw-GHKde-hgbf43-JKBdew-rvKLJc-cewbn /dev/sdc
Physical volume "/dev/sdc" successfully created

Great. Now to recreate the volume group on this physical volume:

# vgcreate -v md1000 /dev/sdc

Wiping cache of LVM-capable devices

Adding physical volume '/dev/sdc' to volume group 'md1000'

Archiving volume group "md1000" metadata (seqno 0).

Creating volume group backup "/etc/lvm/backup/md1000" (seqno 1).

Volume group "md1000" successfully created

Now I have an “empty” volume group but with no logical volumes. I know all the data is there as the initialization didn’t format or wipe the drive. I’ve retrieved the LVM backup file called md1000 and placed it in /tmp/lvm-md1000. When I try to restore it to the new volume group I get:

# vgcfgrestore -f /tmp/lvm-md1000 md1000
/tmp/lvm-md1000: stat failed: Permission denied
Couldn't read volume group metadata.
Restore failed.

After a lot of messing, I copied it to /etc/lvm/backup/md1000 and tried again:

# vgcfgrestore -f /etc/lvm/backup/md1000 md1000
Restored volume group md1000

I don’t know if it was the location, the renaming or both but it worked.

Now the last hurdle is that on a lvdisplay, the logical volumes show up but are marked as:

LV Status NOT available

This is easily fixed by marking the logical volumes as available:

# vgchange -ay
2 logical volume(s) in volume group "md1000" now active

Agus sin é (and that’s it). My logical volumes are recovered with all data intact.
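For reference, the sequence that worked for me can be written down as a checklist. The sketch below deliberately only prints the commands and runs nothing, since every one of them is destructive on the wrong device; the function name and argument order are my own, and removing the stray partition with fdisk still has to be done by hand first.

```shell
#!/bin/sh
# Sketch: print (not run) the recovery commands from this post for a
# given PV id, device, volume group and metadata backup file.
# lvm_recovery_plan is a hypothetical helper name.

lvm_recovery_plan() {
    uuid="$1"
    dev="$2"
    vg="$3"
    backup="$4"

    cat <<EOF
pvcreate -u $uuid $dev
vgcreate -v $vg $dev
vgcfgrestore -f $backup $vg
vgchange -ay
EOF
}

# Usage:
#   lvm_recovery_plan fvrw-GHKde-hgbf43-JKBdew-rvKLJc-cewbn /dev/sdc \
#       md1000 /etc/lvm/backup/md1000
```

Check the device name against the output of fdisk -l or similar before running any of the printed commands, which is exactly the check I skipped in the installer.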

* how these are assigned is not particularly relevant to this story.